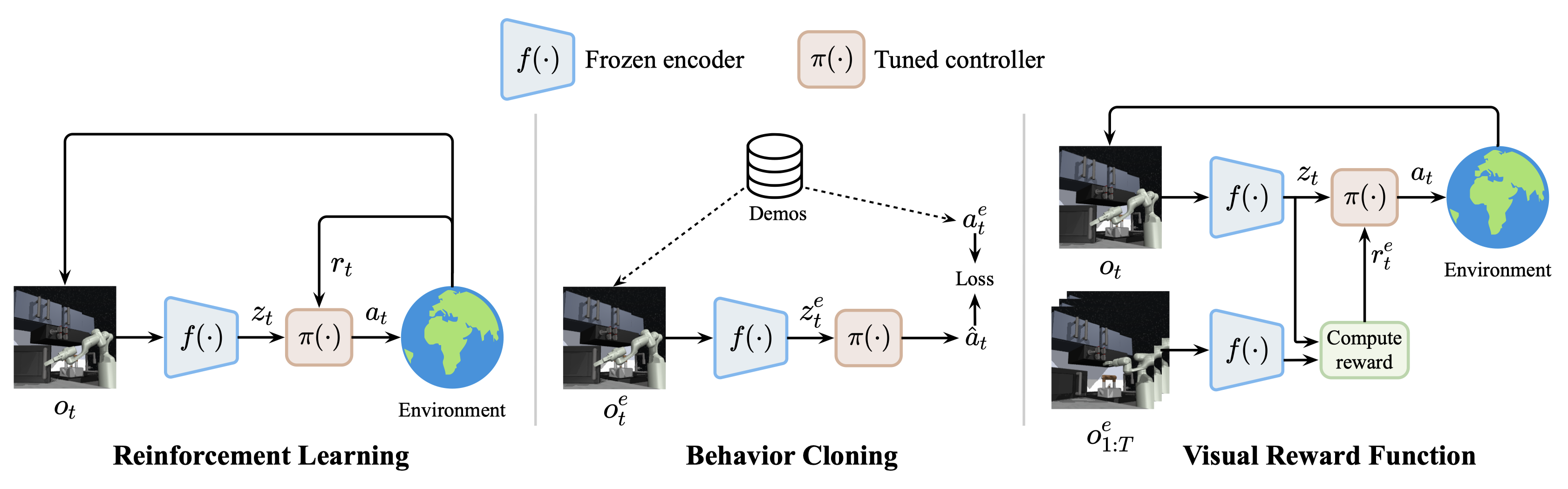

We consider 3 policy learning algorithms: (i) reinforcement learning (RL), (ii) imitation learning through behavior cloning (BC), and (iii) imitation learning with a visual reward function (VRF). The first two approaches (RL and BC) are widely used in the existing literature and treat pre-trained features as representations that encode environment-related information. The last approach (VRF) is an inverse reinforcement learning (IRL) paradigm we adopt, which additionally requires the pre-trained features to capture a high-level notion of task progress, an idea that remains largely underexplored.
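
The exact VRF formulation is not given on this page. As a minimal sketch, assume the reward for a frame is the negative Euclidean distance between its embedding and the embedding of a goal image, both produced by the same frozen pre-trained encoder. The function names are hypothetical, and this embedding-distance reward is an illustrative stand-in rather than the paper's method; it only shows why a VRF needs features whose geometry reflects task progress.

```python
import numpy as np

# Hypothetical illustration: an embedding-distance reward, NOT the paper's VRF.
# Assumes all embeddings come from the same frozen pre-trained vision encoder.

def embedding_distance_reward(frame_emb: np.ndarray, goal_emb: np.ndarray) -> float:
    """Negative L2 distance between one frame embedding and the goal embedding."""
    return -float(np.linalg.norm(frame_emb - goal_emb))

def trajectory_rewards(traj_embs: np.ndarray, goal_emb: np.ndarray) -> np.ndarray:
    """Per-frame rewards for a rollout; traj_embs has shape (T, D)."""
    return -np.linalg.norm(traj_embs - goal_emb[None, :], axis=1)
```

If the encoder encodes task progress, rewards along a successful rollout should rise as frames approach the goal; if it only encodes scene content, the reward can stay flat or noisy even while the task advances.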

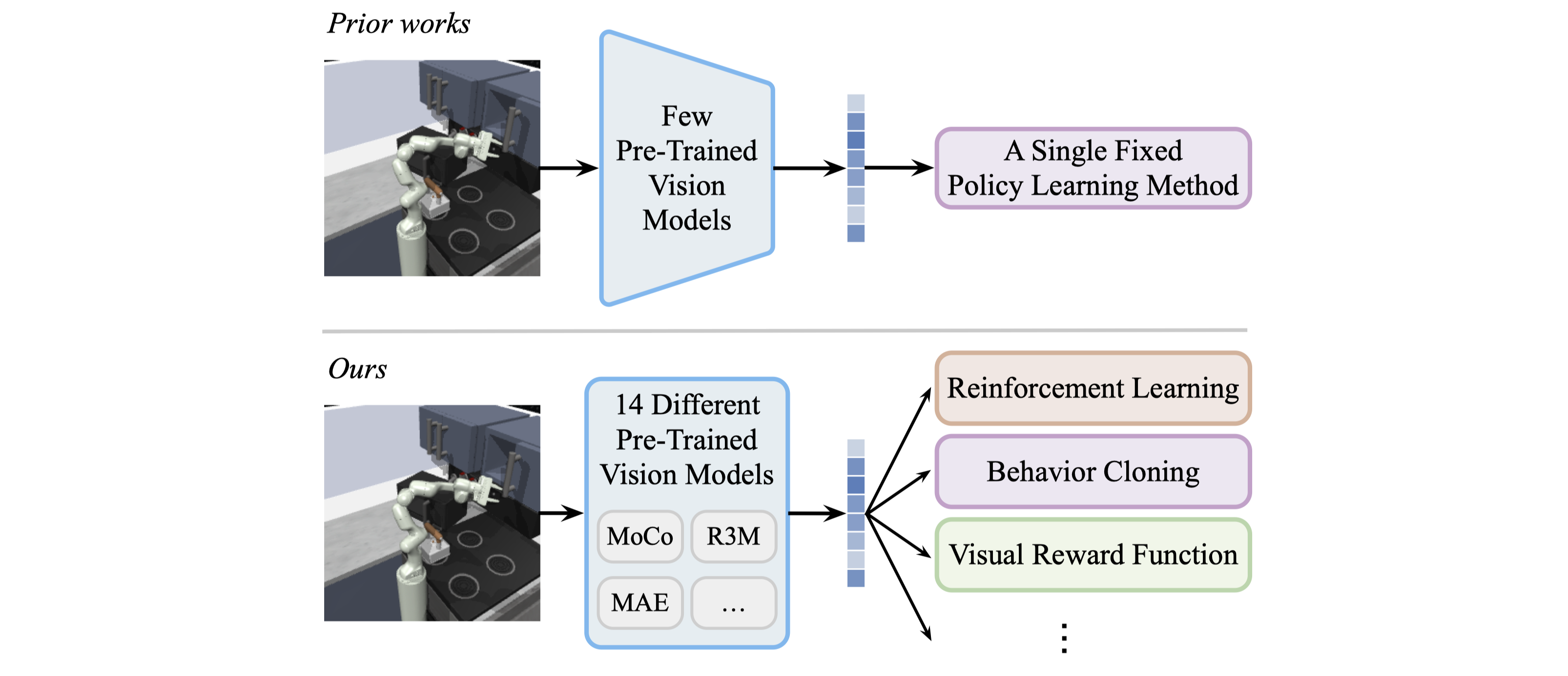

We investigate the efficacy of 14 "off-the-shelf" pre-trained vision models covering different architectures (ResNet and ViT) and prevalent pre-training methods (contrastive learning, self-distillation, language-supervised pre-training, and masked image modeling).

| Model | Highlights |
|---|---|
| MoCo v2 | Contrastive learning, momentum encoder |
| SwAV | Contrast online cluster assignments |
| SimSiam | Without negative pairs |
| DenseCL | Dense contrastive learning, learn local features |
| PixPro | Pixel-level pretext task, learn local features |
| VICRegL | Learn global and local features |
| VFS | Encode temporal dynamics |
| R3M | Learn visual representations for robotics |
| VIP | Learn representations and reward for robotics |
| MoCo v3 | Contrastive learning for ViT |
| DINO | Self-distillation with no labels |
| MAE | Masked image modeling (MIM) |
| iBOT | Combine self-distillation with MIM |
| CLIP | Language-supervised pre-training |

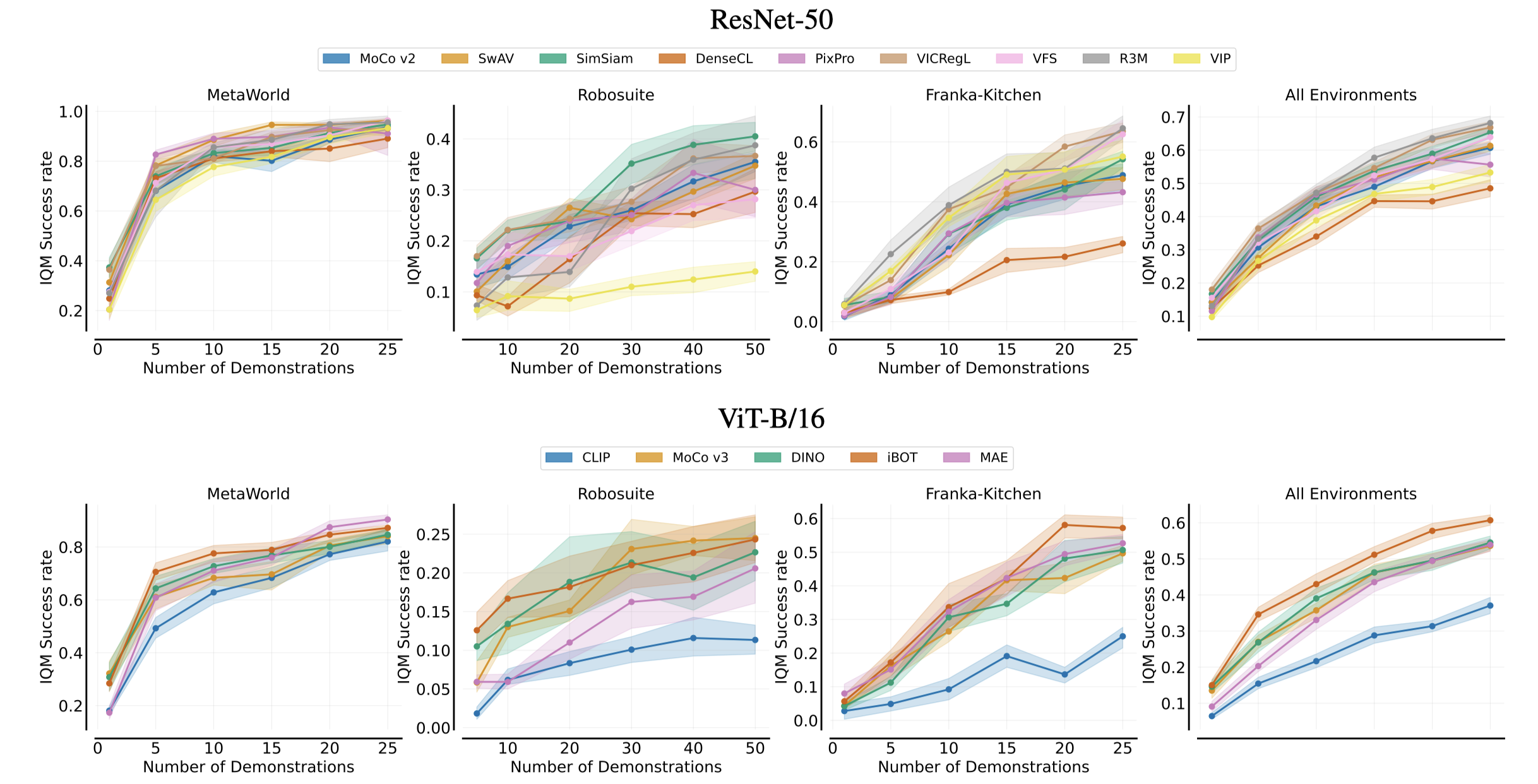

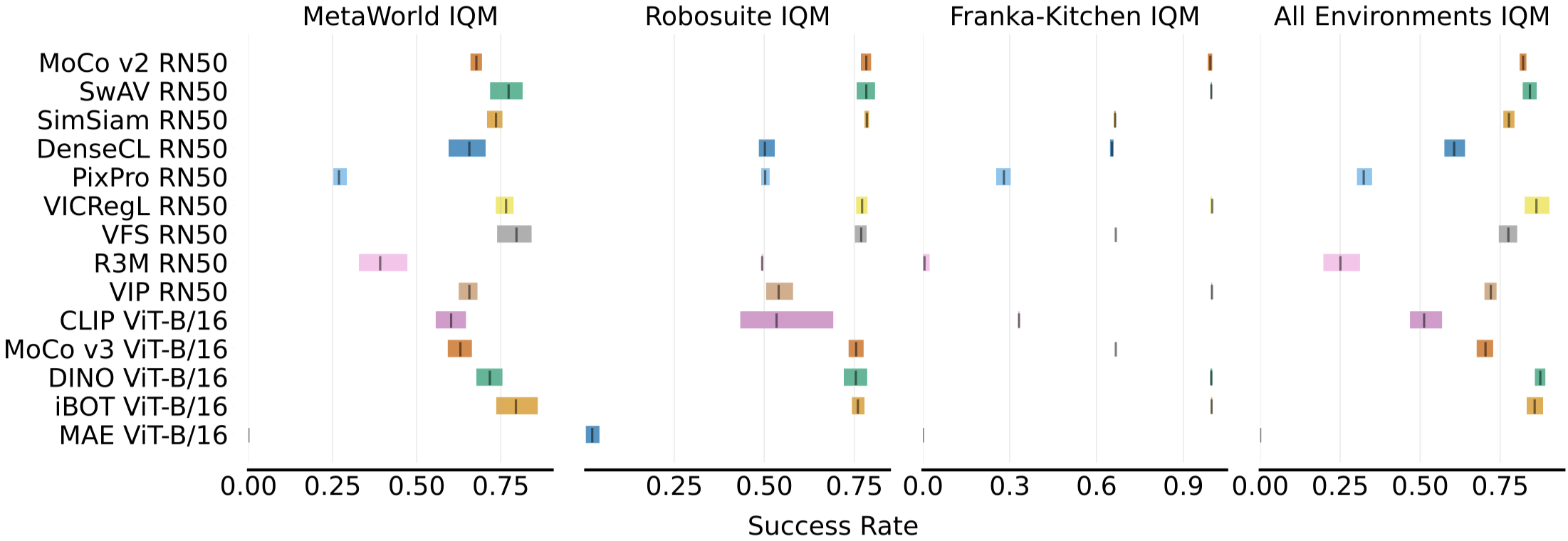

We run extensive experiments on 21 simulated tasks across 3 robot manipulation environments: Meta-World (8 tasks), Robosuite (8 tasks), and Franka-Kitchen (5 tasks).

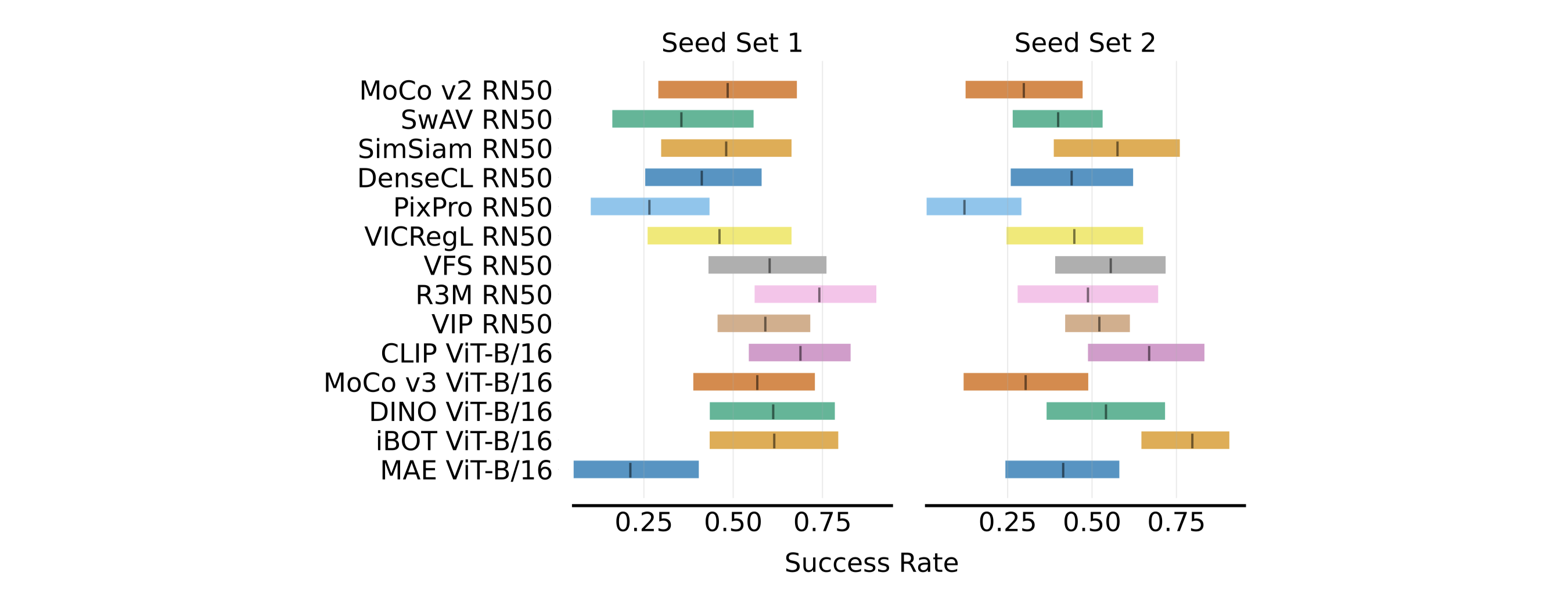

We observe significant inconsistency in RL results across training seeds. This high variability can be attributed to randomness from several sources, such as exploratory choices made during training, stochasticity in the task, and randomly initialized parameters. RL on its own is therefore ill-suited as a downstream policy learning method for comparing different pre-trained vision models.
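
One simple way to check whether seed noise swamps the differences between vision models is to summarize each model's success rates across seeds and compare rough confidence intervals. The sketch below is a hypothetical helper, not the paper's evaluation protocol; it uses a normal-approximation 95% interval, which is optimistic for small seed counts (a t-interval would be wider), and interval overlap is only a heuristic, not a significance test.

```python
import numpy as np

def seed_summary(success_rates):
    """Mean, sample std (ddof=1), and 95% normal-approximation CI half-width
    of one model's success rates (values in [0, 1]) across training seeds."""
    x = np.asarray(success_rates, dtype=float)
    if x.size < 2:
        raise ValueError("need at least two seeds to estimate spread")
    mean = x.mean()
    std = x.std(ddof=1)
    half_width = 1.96 * std / np.sqrt(x.size)
    return mean, std, half_width

def intervals_overlap(a, b):
    """Heuristic: True if the two models' rough 95% intervals overlap,
    meaning these seeds do not clearly separate the models."""
    mean_a, _, half_a = seed_summary(a)
    mean_b, _, half_b = seed_summary(b)
    return abs(mean_a - mean_b) <= half_a + half_b
```

When most pairs of vision models overlap under RL, the resulting ranking says more about seed luck than about the representations.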

Even without control-specific adaptation, BC benefits from the latest benchmark-leading models in the vision community (e.g., VICRegL and iBOT), owing to their ability to capture more environment-relevant information such as object locations and joint positions.
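
To make "without control-specific adaptation" concrete: the encoder stays frozen and only a policy head is trained on its features to regress expert actions. The sketch below assumes features have already been extracted, and it swaps in a closed-form linear ridge-regression head (an MSE objective) for brevity; the paper's actual BC policy architecture and optimizer may differ, and the function names are hypothetical.

```python
import numpy as np

def fit_linear_bc_policy(features, actions, l2=1e-3):
    """Hypothetical BC head on frozen features: solve ridge-regularized least
    squares for W (D, A) and b (A,) mapping features (N, D) to actions (N, A).
    The bias term is not regularized."""
    X = np.asarray(features, dtype=float)
    Y = np.asarray(actions, dtype=float)
    n, d = X.shape
    Xb = np.hstack([X, np.ones((n, 1))])   # append a bias column
    reg = l2 * np.eye(d + 1)
    reg[-1, -1] = 0.0                      # do not penalize the bias
    Wb = np.linalg.solve(Xb.T @ Xb + reg, Xb.T @ Y)
    return Wb[:-1], Wb[-1]

def act(W, b, feature):
    """Predict an action from a single frozen-encoder feature vector."""
    return feature @ W + b
```

Because the encoder is never updated, BC success here depends directly on how much action-relevant state (object and joint positions) the frozen features already expose.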

Different vision models yield the most consistent performance when using VRF, which requires the vision model to learn global features and capture a notion of task progress. MAE is a notable underperforming outlier, likely because its features suffer from the anisotropy problem.
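
A common diagnostic for anisotropy is the average cosine similarity between embeddings of different images: values close to 1 mean the vectors crowd into a narrow cone, so distances between them carry little signal. The helper below is a hypothetical sketch of that diagnostic; this page does not say how, or whether, anisotropy was measured for MAE.

```python
import numpy as np

def mean_pairwise_cosine(embeddings):
    """Average cosine similarity over all distinct pairs of rows in an (N, D)
    array. Near 1: anisotropic (narrow cone). Near 0: directions spread out."""
    E = np.asarray(embeddings, dtype=float)
    E = E / np.linalg.norm(E, axis=1, keepdims=True)   # unit-normalize rows
    sims = E @ E.T                                      # (N, N) cosine matrix
    n = E.shape[0]
    off_diag_sum = sims.sum() - np.trace(sims)          # drop self-similarities
    return off_diag_sum / (n * (n - 1))
```

For a distance-based visual reward, a high value is a warning sign: frames at very different stages of a task may still look almost equally close to the goal.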

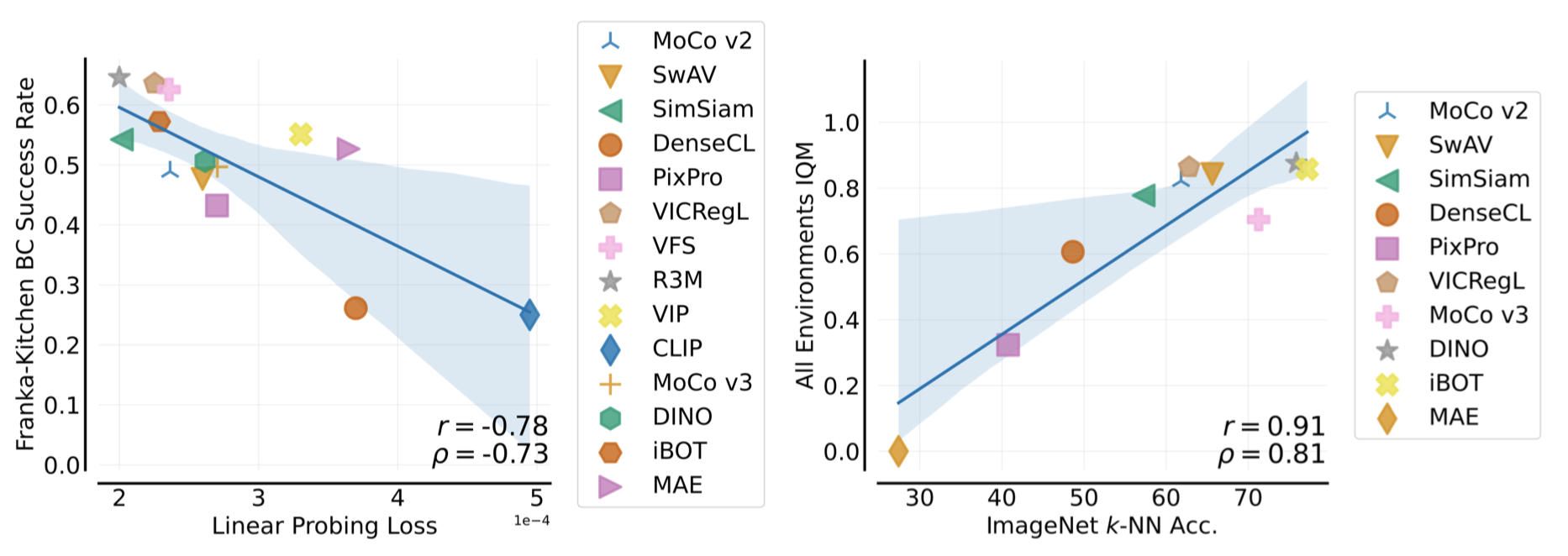

We observe a strong inverse correlation between linear probing loss and BC success rate, suggesting that the linear probing protocol can be a valuable and intuitive alternative for evaluating vision models for motor control. We further find a metric that is highly predictive of VRF performance: ImageNet k-NN classification accuracy.
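
Both proxies can be computed cheaply on frozen features. The sketch below shows a cosine-similarity k-NN classifier with plain majority voting (DINO-style protocols typically use temperature-weighted voting instead) and a Spearman rank correlation for checking, across vision models, how a proxy metric tracks downstream success. The function names, the default `k`, and the voting rule are assumptions, not the paper's exact protocol.

```python
import numpy as np

def knn_accuracy(train_feats, train_labels, test_feats, test_labels, k=20):
    """k-NN classification accuracy on frozen features, using cosine similarity
    and majority vote among the k most similar training samples."""
    def normalize(x):
        x = np.asarray(x, dtype=float)
        return x / np.linalg.norm(x, axis=1, keepdims=True)
    tr, te = normalize(train_feats), normalize(test_feats)
    train_labels = np.asarray(train_labels)
    sims = te @ tr.T                               # (N_test, N_train)
    nn_idx = np.argsort(-sims, axis=1)[:, :k]      # k most similar per test row
    preds = []
    for row in train_labels[nn_idx]:
        values, counts = np.unique(row, return_counts=True)
        preds.append(values[np.argmax(counts)])    # ties go to the smallest label
    return float(np.mean(np.asarray(preds) == np.asarray(test_labels)))

def spearman(x, y):
    """Spearman rank correlation between two per-model metric lists
    (assumes no ties)."""
    rx = np.argsort(np.argsort(x))
    ry = np.argsort(np.argsort(y))
    return float(np.corrcoef(rx, ry)[0, 1])
```

Given one value per vision model, an inverse relationship such as the one reported between linear probing loss and BC success rate would show up as a strongly negative `spearman(probe_losses, bc_success)`, while a predictive k-NN metric would give a strongly positive `spearman(knn_accs, vrf_success)`.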

@article{hu2023pre,
  title={For Pre-Trained Vision Models in Motor Control, Not All Policy Learning Methods are Created Equal},
  author={Hu, Yingdong and Wang, Renhao and Li, Li Erran and Gao, Yang},
  journal={arXiv preprint arXiv:2304.04591},
  year={2023}
}